Vol. 43 No. 1 - Highlights

An LHC first: proton-proton cross-section at 7 TeV (Vol. 43 No. 1)

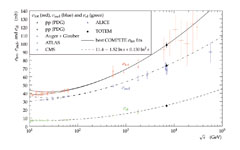

The TOTEM measurement of the proton-proton total cross-section at a centre-of-mass energy of 7 TeV, 98 ± 3 mbarn, is shown together with results from previous measurements and from cosmic rays.

The proton, one of the basic building blocks of atomic nuclei, is a complex system: its sub-components and their interactions hold it together in a highly dynamic way. The inner structure of protons can be studied by observing how they interact with each other, which involves measuring the total cross-section of proton-proton interactions. To measure it, the TOTEM experiment exploits the optical theorem, which relates the total cross-section to the elastic scattering amplitude in the forward direction.
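In the form commonly quoted for proton-proton scattering, the optical theorem reads σ_tot² = 16π(ħc)²/(1 + ρ²) · dσ_el/dt|_{t=0}. Here ρ is the ratio of the real to the imaginary part of the forward elastic amplitude, and it must be supplied from elsewhere. The Python sketch below simply evaluates this relation in both directions. The value ρ = 0.14 is an assumed illustrative input, and nothing here reproduces TOTEM's actual analysis.

```python
import math

# (hbar*c)^2 in mb*GeV^2: converts GeV^-2 into millibarn
HBARC2_MB_GEV2 = 0.3894


def sigma_tot_from_forward_elastic(dsigma_dt_0: float, rho: float) -> float:
    """Total cross-section (mb) from the optical theorem.

    dsigma_dt_0: elastic differential cross-section extrapolated to t = 0 (mb/GeV^2).
    rho: ratio Re f(0) / Im f(0) of the forward elastic amplitude (external input).
    """
    return math.sqrt(16.0 * math.pi * HBARC2_MB_GEV2 / (1.0 + rho**2) * dsigma_dt_0)


def forward_elastic_from_sigma_tot(sigma_tot: float, rho: float) -> float:
    """Inverse relation: dsigma_el/dt at t = 0 (mb/GeV^2) implied by sigma_tot (mb)."""
    return sigma_tot**2 * (1.0 + rho**2) / (16.0 * math.pi * HBARC2_MB_GEV2)


if __name__ == "__main__":
    rho = 0.14  # assumed illustrative value, not a measured input
    d0 = forward_elastic_from_sigma_tot(98.0, rho)
    print(f"dsigma/dt at t=0 implied by 98 mb: {d0:.0f} mb/GeV^2")
    print(f"round trip: {sigma_tot_from_forward_elastic(d0, rho):.1f} mb")
```

With these assumed inputs, a total cross-section of 98 mb corresponds to a forward elastic cross-section of roughly 500 mb/GeV². Because σ_tot enters squared, a small relative error on the extrapolated elastic rate becomes half as large on σ_tot.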

Because the scattering angles are tiny, the protons have to be detected very close to the CERN LHC beams, which requires custom-designed silicon detectors that are fully efficient up to their physical edge. The measurement was performed in a dedicated run with special beam optics that made the angular spread of the beam at the interaction point small compared with the scattering angles.

With a result of (98 ± 3) mbarn at the so far unexplored LHC energy, the TOTEM experiment has confirmed that the proton-proton total cross-section increases with energy. This increase was expected from earlier measurements performed at the CERN ISR in 1972, at energies 100 times lower. It is remarkable that the early, indirect cosmic-ray measurements agree well with the new, precise TOTEM value.

First measurement of the total proton-proton cross section at the LHC energy of √s = 7 TeV

The TOTEM collaboration: G. Antchev et al. (73 co-authors), EPL, 96, 21002 (2011)

Quantitative strain mapping at the nanometer scale (Vol. 43 No. 1)

(a) Scheme and (b) dark-field (004) electron hologram of a Si/Si0.79Ge0.21 superlattice. (c) Phase of the hologram. (d) Strain map.

As strain is now routinely used in transistors to increase the mobility of charge carriers, the microelectronics industry needs ways to map strain with nanometer resolution. Recently, a powerful technique based on transmission electron microscopy (TEM), called dark-field electron holography, was invented by Martin Hÿtch at CEMES in Toulouse. To map the strain, the sample must first be thinned to electron transparency using a focused ion beam tool. Then, to form a dark-field electron hologram, electron beams diffracted by the region of interest (a device or a layer) and by a reference region (usually the substrate) are made to interfere using an electron biprism.

When grown epitaxially on a Si substrate, SiGe layers are tensile-strained in the growth direction, as shown in Figure (a). Because of this strain, the spacing of the hologram fringes varies (b). Using Fourier-space processing, the phase of the electrons can be retrieved from the hologram (c); taking the gradient of this phase then yields the strain map (d).
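The Fourier-space processing and phase-gradient steps can be sketched in one dimension with NumPy. The sketch makes three assumptions. It uses the geometric-phase convention P_g = -2π g·u (sign conventions differ between papers). It takes a Si (004) reflection with |g| = 4/a_Si. It works on a hypothetical synthetic hologram containing a 1.5 % strain step. It illustrates the principle only and is not the processing software used by the authors.

```python
import numpy as np

A_SI_NM = 0.5431          # Si lattice parameter in nm
G_004 = 4.0 / A_SI_NM     # |g| of the (004) reflection, in nm^-1


def synthetic_hologram(x, strain, carrier):
    """Hypothetical 1D dark-field hologram: fringes of spatial frequency `carrier`
    (nm^-1) modulated by the geometric phase P_g = -2*pi*g*u(x)."""
    dx = x[1] - x[0]
    u = np.cumsum(strain) * dx                  # displacement = integral of strain
    phase = -2.0 * np.pi * G_004 * u
    return 1.0 + np.cos(2.0 * np.pi * carrier * x + phase)


def strain_from_hologram(x, hologram, carrier, mask_halfwidth):
    """Recover a strain profile from a 1D hologram.

    1. FFT the hologram and keep only the sideband centred on +carrier.
    2. Inverse FFT, remove the carrier and unwrap: this is the geometric phase P_g.
    3. Strain = -(1 / (2*pi*g)) * dP_g/dx.
    """
    dx = x[1] - x[0]
    freqs = np.fft.fftfreq(x.size, d=dx)
    spectrum = np.fft.fft(hologram)
    spectrum[np.abs(freqs - carrier) > mask_halfwidth] = 0.0   # sideband mask
    sideband = np.fft.ifft(spectrum)
    phase = np.unwrap(np.angle(sideband * np.exp(-2j * np.pi * carrier * x)))
    return -np.gradient(phase, dx) / (2.0 * np.pi * G_004)


if __name__ == "__main__":
    x = np.arange(4000) * 0.1                   # 400 nm field of view, 0.1 nm sampling
    # Hypothetical profile: a 100 nm layer with 1.5 % strain and smooth edges.
    true_strain = 0.015 * 0.5 * (np.tanh((x - 150.0) / 2.0) - np.tanh((x - 250.0) / 2.0))
    holo = synthetic_hologram(x, true_strain, carrier=1.0)
    measured = strain_from_hologram(x, holo, carrier=1.0, mask_halfwidth=0.4)
    print(measured[2000])                       # strain at the centre of the layer
```

The carrier is placed on an exact FFT bin, and the mask excludes both the centreband and the conjugate sideband. The spatial resolution of the map is set by the mask width, so a narrower mask trades resolution for noise rejection.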

Because the technique is quantitative, the results can be compared directly with simulations to obtain information about the composition of the layers. As an example, we have investigated the variation of the substitutional carbon content in annealed Si/SiGeC superlattices. Carbon is used to control the strain and avoid plastic relaxation. However, during annealing, the formation of β-SiC clusters reduces the effect of the C atoms. By combining holography and finite element simulation, we have shown that after annealing at 1050°C, a SiGeC structure behaves like pure SiGe as far as strain is concerned.

The reduction of the substitutional C content in annealed Si/SiGeC superlattices studied by dark-field electron holography

T. Denneulin, J. L. Rouvière, A. Béché, M. Py, J. P. Barnes, N. Rochat, J. M. Hartmann and D. Cooper, Semicond. Sci. Technol. 26, 125010 (2011)

Vector Correlators in Lattice QCD (Vol. 43 No. 1)

Comparison of the vacuum polarisation calculated from the e+e- annihilation cross section with recent lattice simulations.

Vacuum polarisation, the modification of the photon propagator by virtual particle-antiparticle pairs (electron-positron pairs in QED, quark-antiquark pairs in QCD), is one of the first quantum loop corrections encountered in field theory. In both QED and QCD it makes the corresponding coupling constant run as the physical scale is varied. It also shifts the g-factors of electrons and muons away from the value 2 predicted by the Dirac equation. For scales below a few GeV, the QCD vacuum polarisation cannot be calculated perturbatively. It can, however, be accessed via the optical theorem from the cross section for e+e- annihilation into hadrons, which is simply related to the spectral density ρ(s) in the vector isoscalar channel. This paper opens a new direction in three steps. It first assesses the current state of the art in calculating the vacuum polarisation in lattice QCD, the most systematic non-perturbative approach. It then sets out two different routes to improving on it, and finally identifies applications to strong-interaction phenomenology.

Comparison with experimental data reveals that current lattice results are strongly affected by finite-volume effects. The paper provides technical details that allow these distortions to be understood and ultimately extrapolated to the large-volume limit. It also uses the same data to estimate the current-current correlator as a function of Euclidean time. This exposes the possibility that different time ranges are amenable to different theoretical approaches. In particular, the dominant hadronic correction to (g-2) of the muon, about to be measured with unprecedented precision at Fermilab, comes from the range 0.5 fm < ct < 1.5 fm. The same reasoning also suggests a new QCD reference scale, which would help calibrate the lattice spacing using high-precision numerical estimates of the vector correlator.
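The link between annihilation data and the Euclidean-time correlator is a Laplace-type transform of the spectral function. In one common normalisation it reads G(t) = (1/12π²) ∫₀^∞ dω ω² R(ω²) e^{-ω|t|}, where R(s) is the ratio of the e+e- → hadrons and e+e- → μ+μ- cross sections; other papers differ by constant factors. The Python sketch below evaluates this integral numerically for a deliberately crude, hypothetical R(s). That input is the parton-model value R = 2 for u, d and s quarks above an assumed 1 GeV threshold, chosen because the result can be checked against a closed form. Real analyses use measured R(s) data.

```python
import numpy as np

HBARC_GEV_FM = 0.1973269804  # hbar*c in GeV*fm


def correlator_from_R(t_fm, R, omega_max=50.0, n=500_001):
    """Euclidean vector correlator G(t) in GeV^3 from an R-ratio function R(s).

    Evaluates G(t) = 1/(12 pi^2) * int dw w^2 R(w^2) exp(-w t) with the trapezoidal
    rule on [0, omega_max] GeV; the exponential makes the truncation harmless
    for t of a few tenths of a fm or more.
    """
    t = t_fm / HBARC_GEV_FM                     # Euclidean time in GeV^-1
    w = np.linspace(0.0, omega_max, n)          # w = sqrt(s) in GeV
    f = w**2 * R(w**2) * np.exp(-w * t)
    integral = np.sum(0.5 * (f[1:] + f[:-1]) * np.diff(w))
    return integral / (12.0 * np.pi**2)


def correlator_step_R_exact(t_fm, R0, w0):
    """Closed form for R(s) = R0 above sqrt(s) = w0 and zero below."""
    t = t_fm / HBARC_GEV_FM
    return R0 / (12.0 * np.pi**2) * np.exp(-w0 * t) * (w0**2 / t + 2.0 * w0 / t**2 + 2.0 / t**3)


def toy_R(s):
    # Hypothetical input: parton-model R = 3 * (4/9 + 1/9 + 1/9) = 2 above 1 GeV.
    return np.where(s >= 1.0, 2.0, 0.0)


if __name__ == "__main__":
    for t_fm in (0.5, 1.0, 1.5):                # the window quoted for the muon (g-2)
        print(t_fm, correlator_from_R(t_fm, toy_R), correlator_step_R_exact(t_fm, 2.0, 1.0))
```

The exponential kernel means that larger Euclidean times are increasingly dominated by the low-energy part of R(s). This is why different time ranges can call for different theoretical treatments.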

Vector Correlators in Lattice QCD: Methods and Applications

D. Bernecker and H. Meyer, Eur. Phys. J. A, 47 11, 1 (2011)

Leidenfrost propulsion on a ratchet (Vol. 43 No. 1)

Levitating Leidenfrost drop on a hot ratchet: a force acts on the drop, deflecting a fiber dipped into it.

The Leidenfrost phenomenon is observed when liquids are deposited on solids much hotter than their boiling point. The liquids then levitate on a cushion of their own vapour and, having no contact with the substrate, evaporate slowly without boiling. The vapour cushion also makes the liquids ultra-mobile. In 2006, Linke discovered that Leidenfrost drops on a hot ratchet self-propel in the direction of "climbing" the steps of the teeth. The corresponding forces were found to be 10 to 100 µN, much smaller than the weight of the liquid, yet large enough to generate velocities of order 10 cm/s.

The origin of the motion remained unclear, despite stimulating proposals in Linke's original paper. As a first step, Lagubeau et al. reported in 2011 that discs of sublimating dry ice also levitate and self-propel on hot ratchets, showing that liquid deformations are not responsible for the motion. However, in all these experiments the levitating object squeezes the vapour beneath it, and the resulting flow might be rectified by the asymmetric profile of the ratchet. The key question was not only to check this assumption, but also to determine the preferred direction of the vapour flow. Tracking tiny glass beads in the vapour showed that rectification indeed takes place along the descending slope of the teeth, with the vapour escaping laterally once it reaches the step of a tooth. Hence the levitating body is entrained by the viscous drag arising from this directional vapour flow. Goldstein et al. reached a similar conclusion in a paper to appear in the Journal of Fluid Mechanics. Many questions remain, however. Ratchets also generate specific friction (the liquid hits the teeth as it moves forward), and the optimal ratchet, maximising the speed of these little hovercraft, has not yet been designed.
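The viscous-entrainment argument can be turned into an order-of-magnitude estimate. A vapour film of thickness h flowing at mean speed U exerts a shear stress of order ηU/h on the levitating body, so over a contact area of order πR² the drag is roughly F ~ ηUπR²/h. The Python sketch below only evaluates this scaling and ignores prefactors of order one. The vapour viscosity, flow speed, film thickness and body radius are hypothetical round numbers, not values measured by the authors.

```python
import math


def viscous_drag_estimate(eta, U, radius, h):
    """Order-of-magnitude viscous drag (N) exerted by a vapour film.

    eta: vapour viscosity (Pa s); U: mean vapour speed (m/s);
    radius: radius of the levitating body (m); h: film thickness (m).
    Shear stress ~ eta * U / h, acting over an area ~ pi * radius**2.
    """
    return eta * U / h * math.pi * radius**2


if __name__ == "__main__":
    # Hypothetical inputs: eta ~ 2e-5 Pa s, U ~ 0.5 m/s, R ~ 5 mm, h ~ 50 micrometres.
    F = viscous_drag_estimate(2e-5, 0.5, 5e-3, 50e-6)
    print(f"F ~ {F * 1e6:.0f} uN")
```

With these assumed inputs the estimate is about 16 µN, the same order of magnitude as the 10 to 100 µN quoted above. This is a plausibility check only, not a derivation of the measured forces.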

Viscous mechanism for Leidenfrost propulsion on a ratchet

G. Dupeux, M. Le Merrer, G. Lagubeau, C. Clanet, S. Hardt and D. Quéré, EPL, 96, 58001 (2011)

Yellow-green and amber InGaN micro-LED arrays (Vol. 43 No. 1)

Longer-wavelength InGaN emitters (~600 nm) are important for potential applications such as optoelectronic tweezers and visible-light communication. The primary obstacle to developing InGaN light-emitting diodes (LEDs) at longer wavelengths, however, is the difficulty of incorporating a high indium content (needed to extend the emission wavelength) while maintaining good epitaxial quality. At high concentrations, indium tends to aggregate. High-indium InGaN structures also exhibit strong piezoelectric fields, which reduce the overlap between the electron and hole wavefunctions and consequently weaken the emission. To overcome these effects, new high-indium epitaxial InGaN structures were grown. They include an electron reservoir layer that enhances indium incorporation and light emission, while retaining the conventional (0001) orientation.

Photoluminescence measurements reveal that the emission wavelength of these high-In quantum well structures can be tuned from 560 nm to 600 nm, depending on the actual indium content. Yellow-green and amber devices in array format, with individually addressable LED pixels, were developed from these wafer structures. Power measurements show that the power density per pixel reaches 8 W/cm² for the yellow-green device (4.4 W/cm² for the amber one). This is much higher than for conventional broad-area LEDs made under the same conditions, and nearly an order of magnitude higher than required for optoelectronic tweezers, which validates the feasibility of using these micro-LEDs for tweezing. However, the emission wavelength is found to blueshift strongly, by up to ~50 nm, as the injection current increases. Numerical simulations reveal that this is caused by screening of the quantum-confined Stark effect and by band filling, so further optimisation of the growth conditions and epitaxial structures is needed.
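To express the ~50 nm blueshift in energy terms, the photon energy is E = hc/λ, with hc ≈ 1239.84 eV·nm. The short Python sketch below converts a wavelength shift into an energy shift. The end points 600 nm and 550 nm are assumed for illustration only, since the exact start and end wavelengths are not given here.

```python
HC_EV_NM = 1239.84198  # Planck constant times speed of light, in eV*nm


def photon_energy_ev(wavelength_nm: float) -> float:
    """Photon energy in eV for a vacuum wavelength in nm."""
    return HC_EV_NM / wavelength_nm


def blueshift_mev(start_nm: float, end_nm: float) -> float:
    """Increase of the emission peak energy (meV) when it moves from start_nm to a shorter end_nm."""
    return 1000.0 * (photon_energy_ev(end_nm) - photon_energy_ev(start_nm))


if __name__ == "__main__":
    print(f"{blueshift_mev(600.0, 550.0):.0f} meV")
```

For these assumed end points the shift is close to 0.19 eV. Because E scales as 1/λ, the same wavelength shift corresponds to a smaller energy shift at longer wavelengths.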

Electrical, spectral and optical performance of yellow-green and amber micro-pixelated InGaN light-emitting diodes

Z. Gong, N.Y. Liu, Y.B. Tao, D. Massoubre, E.Y. Xie, X.D. Hu, Z.Z. Chen, G.Y. Zhang, Y.B. Pan, M.S. Hao, I.M. Watson, E. Gu and M.D. Dawson, Semicond. Sci. Technol. 27, 015003 (2012)

Effects of electric field-induced versus conventional heating (Vol. 43 No. 1)

Study of mobile phone electric field shows no extraordinary heating

According to the present work, the effect of microwave heating and cell phone radiation on sample material is no different from that of a temperature increase.

Richert and coworkers made the first systematic attempt to quantify the difference between microwave-induced heating and conventional heating with a hotplate or an oil bath, using thin liquid glycerol samples. The authors measured the changes in molecular mobility and reactivity induced by electric fields in these samples, which can be gauged by the so-called configurational temperature. They found that thin samples heated by low-frequency electric fields can have considerably higher mobility and reactivity than samples heated conventionally, even at exactly the same temperature. They also found that at frequencies above several megahertz and for samples thicker than one millimetre, the type of heating has no significant impact on molecular mobility and reactivity, which then depend mainly on the sample temperature. In that regime, the configurational temperatures are only marginally higher than the measured temperature.

Previous studies were mostly fundamental in nature and did not establish a connection between microwaves and mobile phone heating effects. These findings imply that for heating with microwave or cell phone radiation operating in the gigahertz frequency range, no other effect than a temperature increase should be expected.

Since the results are based on averaged temperatures, future work will be required to quantify local overheating, which can, for example, occur in biological tissue subjected to a microwave field, and better assess the risks linked to using both microwaves and mobile phones.

Heating liquid dielectrics by time dependent fields

A. Khalife, U. Pathak and R. Richert, Eur. Phys. J. B 83, 429-435 (2011)

Ordered Si oxide nanodots at atmospheric pressure (Vol. 43 No. 1)

SEM image of the surface morphology of SiOxHyCz nano-islands localized at the center of nano-indents

It is now possible to create, simultaneously and in only a few seconds, highly reproducible three-dimensional silicon oxide nanodots on micrometre-scale silicon films. The present study shows that a square array of such nanodots can be created using regularly spaced nanoindents in the substrate. Such arrays could ultimately find applications as biosensors for genomics or bio-diagnostics.

The process used is atmospheric pressure plasma-enhanced chemical vapour deposition. This approach is much faster than methods such as nanoscale lithography, which only permit the deposition of one nanodot at a time. It also allows the growth of a well-ordered array of nanodots, which is not the case for many growth processes. In addition, it can be carried out at atmospheric pressure, which lowers its cost compared with low-pressure deposition processes.

One goal was to understand the self-organization mechanisms that lead to preferential deposition of the nanodots in the indents. The authors varied the spacing between indents to make it comparable to the average distance travelled by the silicon oxide particles of the deposited material. By adapting both the indent spacing and the temperature of the silicon substrate, they observed optimum self-ordering inside the indents using atomic force microscopy.

The next step will be to investigate how such nanoarrays could be used as nanosensors. The authors plan to develop similar square arrays on metallic substrates in order to better control the driving forces behind the highly ordered self-organisation of the nanodots. Further research will be needed to give individual nanodots sensing ability by associating them with probe molecules designed to recognise the target molecules to be detected.

Ordering of SiOxHyCz islands deposited by atmospheric pressure microwave plasma torch on Si(100) substrates patterned by nanoindentation

X. Landreau, B. Lanfant, T. Merle, E. Laborde, C. Dublanche-Tixier and P. Tristant, Eur. Phys. J. D, 65/3, 421 (2011)

How to build doughnuts with Lego blocks (Vol. 43 No. 1)

A mix of two chemically discordant polymers with strong bonds creates previously unseen nanoscale assemblies

The present work reveals how nature minimises energy costs in liquid rings deposited on a silicon surface. The rings have an internal nanostructure made of di-blocks, that is, two chemically discordant polymers joined by strong bonds.

The authors first created rings of di-block polymers, which they liken to doughnuts built from Lego blocks. The material's internal structure is discretised like Lego blocks, so the rings only approximate the seamless shape of a doughnut (see photo).

The authors measured the dynamics of interacting edges in ring structures that, when first created, display asymmetric steps, i.e. different spacings inside and outside the ring. They found that the interaction shaping the ring over time is the repulsion between edges. The source of this repulsion is intuitive: an edge is a defect that perturbs the surface profile, at a cost in surface energy.

These edges can be considered as defects in a material that is otherwise perfectly ordered at the nanoscale. Understanding how such defects interact could therefore help scientists eliminate them, by clarifying how these materials self-assemble. Such systems could also provide an ideal basis for nanoscale patterning, data storage and nanoelectronics.

Dynamics of interacting edge defects in copolymer lamellae

J. D. McGraw, I. D. W. Rowe, M. W. Matsen and K. Dalnoki-Veress, Eur. Phys. J. E, 34, 131 (2011)

How Ni-Ti nanoparticles go back to their memorised shape (Vol. 43 No. 1)

Can metals remember their shape at nanoscale, too?

Metallic alloys can be stretched or compressed in such a way that they stay deformed once the stress on the material has been released. Only shape memory alloys, however, can return to their original shape after being heated above a specific temperature. For the first time, the authors determine the absolute values of the temperatures at which shape memory nanospheres start changing back to their memorised shape. This happens through a so-called structural phase transition, whose temperature depends on the size of the particles. To achieve this result, they performed computer simulations of nanoparticles with diameters between 4 and 17 nm, made of an alloy of equal proportions of nickel and titanium.

Molecular dynamics simulations make it possible to visualise how the material transforms during the transition. As the temperature increases, the material's atomic-scale crystal structure shifts from lower to higher symmetry. The transition is explained by the strong influence of the energy difference between the low- and high-symmetry structures, which differs at the surface of the nanoparticle from that in its interior.

Most prior work on shape memory materials concerned macroscopic systems. Potential new applications include nanoswitches, in which laser irradiation would heat the shape memory material and trigger a change in its length that would, in turn, act as a switch.

Simulation of the thermally induced austenitic phase transition in NiTi nanoparticles

D. Mutter and P. Nielaba, Eur. Phys. J. B 84, 109 (2011)

First quantum machine to produce four clones (Vol. 43 No. 1)

These intertwined rays of light are an artistic metaphor for quantum cloning

This article presents a theory for a quantum cloning machine able to produce several copies of the quantum state of a particle at the atomic or sub-atomic scale. It could have implications for quantum information processing methods used, for example, in message encryption systems.

Quantum cloning is difficult because the laws of quantum mechanics only allow an approximate copy of an original quantum state to be made, not an exact one: measuring the state before cloning it would alter it. The present work shows that it is theoretically possible to create four approximate copies of an initial quantum state, in a process called asymmetric cloning. The authors have extended previous work that was limited to producing only two or three copies of the original state. One key challenge is that the quality of each approximate copy decreases as the number of copies increases. It nevertheless appears possible to optimise the quality of the cloned copies, yielding four good approximations of the initial quantum state. The present quantum cloning machine is shown to be universal, and therefore able to work with any quantum state, from that of a photon to that of an atom.
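The trade-off between the number of copies and their quality can be made concrete for the simplest case, the symmetric one, in which all copies have the same fidelity. For an optimal universal symmetric cloner taking N identical inputs to M copies in dimension d, Werner's formula gives a single-copy fidelity F = N/M + (M − N)(N + 1)/(M(N + d)). The Python sketch below evaluates it exactly with fractions. It does not implement the asymmetric 1 → 4 machine of the paper, where the copies may have different fidelities.

```python
from fractions import Fraction


def symmetric_clone_fidelity(d: int, m_copies: int, n_inputs: int = 1) -> Fraction:
    """Single-copy fidelity of the optimal universal symmetric N -> M cloner
    in Hilbert-space dimension d (Werner's formula)."""
    n, m = n_inputs, m_copies
    if m < n:
        raise ValueError("cannot produce fewer copies than inputs")
    return Fraction(n, m) + Fraction((m - n) * (n + 1), m * (n + d))


if __name__ == "__main__":
    for d in (2, 3):
        fids = [symmetric_clone_fidelity(d, m) for m in range(1, 5)]
        print(d, [str(f) for f in fids])
```

For qubits (d = 2) the fidelities for one to four copies are 1, 5/6, 7/9 and 3/4. Adding copies lowers the quality of each one, which is the challenge described above.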

Asymmetric quantum cloning has applications in analysing the security of message encryption systems based on shared secret quantum keys. Two parties can tell whether their communication is secure by analysing the quality of each copy of their secret key. Any third party trying to gain knowledge of that key would be detected, because measuring it would disturb its state.

Optimal asymmetric 1→4 quantum cloning in arbitrary dimension

X.J. Ren, Y. Xiang and H. Fan, Eur. Phys. J. D (2011)

Energy transport for biomolecular networks (Vol. 43 No. 1)

Probability density of the average energy transfer time τ as a function of the de-phasing rate γ. As indicated by the median (white line), for a typical configuration, τ is reduced by the de-phasing up to an optimal rate γ, where the transfer becomes most efficient. However, these noise-assisted transfer times are longer than the minimum transfer time achieved by an optimized configuration for vanishing de-phasing (minimum of dot-dashed line on the left).

Recent experimental demonstrations of long-lived quantum coherence in certain photosynthetic light-harvesting structures have launched a flurry of controversy over the role of coherence in biological function. An ongoing investigation into the astonishingly high energy transport efficiency of these structures suggests that nature takes advantage of quantum coherent dynamics.

We investigate the fundamental principles of quantum coherent energy transport in ensembles of spatially disordered molecular networks subjected to dephasing noise. Dephasing reduces the coherence between individual network nodes. It has already been shown to assist transport substantially in situations where quantum coherence is a disadvantage because of destructive interference, e.g. in the presence of disorder and quantum localization. In a statistical survey, we map the probability landscape of transport efficiency for the whole ensemble of disordered networks. We search it for specially adapted molecular conformations that nature may select to facilitate energy transport. We find certain optimal molecular configurations that, by virtue of constructive quantum interference, yield the highest transport efficiencies in the absence of dephasing noise. Moreover, the transport efficiencies realized by these optimal configurations are systematically higher than the noise-assisted efficiencies mentioned above. As discussed in the article, this gives a clear incentive to select configurations in which quantum coherence can be harnessed.
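The interplay between coherence, dephasing and trapping can be illustrated with a minimal Lindblad model (ħ = 1) with three ingredients. The network evolves under a Hamiltonian H. Pure dephasing at rate γ on every site damps the off-diagonal elements of the density matrix. An irreversible sink, attached to the last site, traps population at rate κ. The Python sketch below is a hypothetical toy version, not the authors' ensemble of disordered networks. With a strongly detuned two-site "network" it shows dephasing-assisted transfer, the effect the article contrasts with optimised coherent configurations.

```python
import numpy as np


def transfer_efficiency(H, gamma, kappa, t_final, dt=2e-3):
    """Population that has reached a sink attached to the last site by t_final.

    Lindblad dynamics with hbar = 1:
      * coherent evolution under the network Hamiltonian H,
      * pure dephasing at rate gamma on every site, which damps the
        off-diagonal elements of rho at rate gamma,
      * irreversible trapping from the last site into the sink at rate kappa.
    The excitation starts on site 0.
    """
    n = H.shape[0]
    rho = np.zeros((n, n), dtype=complex)
    rho[0, 0] = 1.0
    trap = np.zeros((n, n))
    trap[-1, -1] = 1.0

    def rhs(r):
        coherent = -1j * (H @ r - r @ H)
        dephasing = gamma * (np.diag(np.diag(r)) - r)
        trapping = -0.5 * kappa * (trap @ r + r @ trap)
        return coherent + dephasing + trapping

    for _ in range(int(round(t_final / dt))):
        # Classic fourth-order Runge-Kutta step for the density matrix.
        k1 = rhs(rho)
        k2 = rhs(rho + 0.5 * dt * k1)
        k3 = rhs(rho + 0.5 * dt * k2)
        k4 = rhs(rho + dt * k3)
        rho = rho + dt / 6.0 * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
    # Only the trapping term changes the trace, so the missing population is in the sink.
    return 1.0 - np.trace(rho).real


if __name__ == "__main__":
    # Hypothetical detuned dimer: coupling 1, energy mismatch 10, sink rate 1.
    H = np.array([[0.0, 1.0], [1.0, 10.0]])
    for gamma in (0.0, 1.0, 10.0):
        print(gamma, round(transfer_efficiency(H, gamma, kappa=1.0, t_final=10.0), 3))
```

In this toy dimer the energy mismatch suppresses coherent transfer, and dephasing broadens the levels enough to open an incoherent hopping channel. The article's point is that well-chosen network geometries can do better still without any noise.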

The optimization topography of exciton transport

T. Scholak, T. Wellens and A. Buchleitner, EPL, 96, 10001 (2011)

Double ionization with absorbers (Vol. 43 No. 1)

The system is initially described by a two-particle wave function (top). When one particle is ionized and subsequently hits the absorber (Τ), the remaining electron is described by a one-particle density matrix (middle), which may be thought of as an ensemble of several one-particle wave functions. Since the remaining particle may also be absorbed, the vacuum state can be populated as well (bottom).

In quantum dynamics, unbound systems, such as atoms being ionised, are typically very costly to describe numerically because their spatial extent is not limited. This cost would be reduced if one could settle for a description of the remainder of the system and disregard the escaping particles. Escaping particles can be removed by introducing absorbers close to the boundary of the numerical grid. The problem, however, is that when such "interactions" are combined with the Schrödinger equation, all information about the system is lost as soon as a particle is absorbed. To keep describing the remaining particles, the formalism must therefore be generalised. As it turns out, this generalisation is provided by the Lindblad equation.

This generalised formalism has been applied to calculate two-photon double ionisation probabilities for a model helium atom exposed to laser fields. In the simulations, the state of the remaining electron was reconstructed as the first electron was absorbed. There was a finite probability that the second electron would also hit the absorber at some point, so the system could, with a certain probability, end up in the vacuum state, i.e. the state with no particles. As this probability was seen to converge, it was interpreted as the double ionisation probability.

The validity of this approach was verified by comparing its predictions with those of a more conventional method applying a large numerical grid.
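The bookkeeping behind the absorber approach can be sketched in a reduced, hypothetical setting with a single particle instead of two electrons (ħ = m = 1). The absorber is modelled as a complex potential −iΓ(x) near the grid edges. In the Lindblad picture, the probability it removes from the one-particle sector, at rate 2⟨Γ⟩, is transferred to the vacuum state rather than discarded. The Python sketch below propagates a wave packet with the Crank-Nicolson scheme and accumulates the vacuum population. The grid, absorber shape and packet parameters are arbitrary assumptions, and the two-electron reconstruction of the paper is not reproduced.

```python
import numpy as np


def absorb_one_particle(n=401, half_width=50.0, x_abs=35.0, eta=0.01,
                        k0=2.0, sigma=3.0, dt=0.01, t_final=40.0):
    """Propagate one particle (hbar = m = 1) with a complex absorbing potential.

    Returns (probability left on the grid, accumulated vacuum population).
    """
    x = np.linspace(-half_width, half_width, n)
    dx = x[1] - x[0]
    # Kinetic energy -1/2 d^2/dx^2 with second-order finite differences.
    off = np.ones(n - 1)
    H = -0.5 * (np.diag(off, -1) - 2.0 * np.eye(n) + np.diag(off, 1)) / dx**2
    # Quadratic absorber Gamma(x) >= 0, switched on beyond |x| = x_abs.
    gamma = np.where(np.abs(x) > x_abs, eta * (np.abs(x) - x_abs) ** 2, 0.0)
    H_eff = H - 1j * np.diag(gamma)
    # Crank-Nicolson step: (1 + i dt H_eff / 2) psi_new = (1 - i dt H_eff / 2) psi.
    eye = np.eye(n)
    step = np.linalg.solve(eye + 0.5j * dt * H_eff, eye - 0.5j * dt * H_eff)

    psi = np.exp(-x**2 / (4.0 * sigma**2) + 1j * k0 * x)   # packet moving towards +x
    psi /= np.sqrt(np.sum(np.abs(psi) ** 2) * dx)

    p_vacuum = 0.0
    for _ in range(int(round(t_final / dt))):
        psi_new = step @ psi
        mid = 0.5 * (psi + psi_new)
        # Probability removed by the absorber in this step, 2 <Gamma> dt evaluated at the
        # Crank-Nicolson midpoint, is credited to the vacuum state instead of being lost.
        p_vacuum += 2.0 * dt * np.sum(gamma * np.abs(mid) ** 2) * dx
        psi = psi_new
    return np.sum(np.abs(psi) ** 2) * dx, p_vacuum


if __name__ == "__main__":
    norm, p_vac = absorb_one_particle()
    print(f"left on grid: {norm:.6f}, vacuum: {p_vac:.6f}, sum: {norm + p_vac:.12f}")
```

Evaluating ⟨Γ⟩ at the Crank-Nicolson midpoint makes the discrete bookkeeping consistent with the scheme's own norm loss. As a result, the grid norm plus the vacuum population stays equal to 1 up to rounding error.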

A master equation approach to double ionization of helium

S. Selstø, T. Birkeland, S. Kvaal, R. Nepstad and M. Førre, J. Phys. B: At. Mol. Opt. Phys. 44, 215003 (2011)

Entanglement or separability: factorisation of density matrix algebra (Vol. 43 No. 1)

The separable quantum states form the blue double pyramid, while the entangled states are located in the remaining corners of the tetrahedron

The present theoretical description of teleportation phenomena in sub-atomic physical systems shows that the mathematical tools leave one free to choose how to split a composite physical system into its constituent parts when analysing its so-called quantum state. The quantum state is the state in which the system is found when a measurement is performed, and it may be either entangled or not. In an entangled state, the composite system is in a definite (pure) state while its parts, taken individually, are not. This concept of entanglement, used in quantum information theory, applies when a measurement in laboratory A (called Alice) depends on the definite measurement in laboratory B (called Bob), because the two measurements are correlated. This phenomenon cannot be observed in larger-scale physical systems.

The findings show that the entanglement or separability of a quantum state (whether or not its sub-states are separable, i.e. whether Alice and Bob are able to find independent measurements) depends on the perspective used to assess its status.

A so-called density matrix is used to describe a quantum state mathematically. To assess the status of this state, the matrix can be factorised in different ways, much as a cake can be cut in many ways. The Vienna physicists have shown that the choice of factorisation can lead to either entanglement or separability. This choice, however, can only be made theoretically, since in experiments the factorisation is fixed by the experimental conditions.

The formalism is applied to model physical systems of quantum information, including the theoretical study of teleportation, i.e. the transfer of a single quantum state. Other practical applications include a better understanding of K-meson creation and decay in particle physics, and of the quantum Hall effect, in which the electrical conductivity takes quantised values.
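The dependence on the factorisation can be checked numerically for two qubits. As a simplifying assumption, a factorisation of the four-dimensional state space is represented by a unitary U whose columns define the product basis |ij⟩, so the same density matrix ρ is viewed in that factorisation as U†ρU. Entanglement is tested with the Peres-Horodecki (positive partial transpose) criterion, which is necessary and sufficient for two qubits. This Python sketch illustrates the idea only and is not the algebraic formalism used by the authors.

```python
import numpy as np


def partial_transpose_second(rho):
    """Partial transpose over the second qubit of a 4x4 two-qubit density matrix."""
    r = rho.reshape(2, 2, 2, 2)  # r[a, b, a', b'] = <a b| rho |a' b'>
    return r.transpose(0, 3, 2, 1).reshape(4, 4)


def is_entangled(rho, tol=1e-12):
    """Peres-Horodecki test: a negative eigenvalue of the partial transpose
    signals entanglement (necessary and sufficient for two qubits)."""
    return bool(np.linalg.eigvalsh(partial_transpose_second(rho)).min() < -tol)


# Bell state (|00> + |11>)/sqrt(2) in the standard factorisation C^2 (x) C^2.
bell = np.array([1.0, 0.0, 0.0, 1.0]) / np.sqrt(2.0)
rho = np.outer(bell, bell.conj())

# An alternative factorisation: U = CNOT (H (x) 1) maps |00> onto the Bell state,
# so in the basis defined by the columns of U the same state reads |00><00|.
hadamard = np.array([[1.0, 1.0], [1.0, -1.0]]) / np.sqrt(2.0)
cnot = np.array([[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 0, 1], [0, 0, 1, 0]], dtype=float)
U = cnot @ np.kron(hadamard, np.eye(2))
rho_other = U.conj().T @ rho @ U

if __name__ == "__main__":
    print("standard factorisation entangled:", is_entangled(rho))
    print("alternative factorisation entangled:", is_entangled(rho_other))
```

The same matrix is entangled with respect to one split into two qubits and a product state with respect to another. In an experiment, the relevant U is fixed by which subsystems Alice and Bob can actually address.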

Entanglement or separability: the choice of how to factorise the algebra of a density matrix

W. Thirring, R.A. Bertlmann, P. Köhler and H. Narnhofer, Eur. Phys. J. D, 64/2, 181 (2011)
