Nuclear physics with a medium-energy Electron-Ion Collider (Vol. 43 No. 5)

Possible realizations of a medium-energy EIC: MEIC at Jefferson Lab (top) and eRHIC at Brookhaven National Lab (bottom)


Quarks and gluons are the fundamental constituents of most of the matter in the visible Universe; Quantum Chromodynamics (QCD), a relativistic quantum field theory based on color gauge symmetry, describes their strong interactions. The understanding of the static and dynamical properties of the visible strongly interacting particles - hadrons - in terms of quarks and gluons is one of the most fascinating issues in hadron physics and QCD. In particular the exploration of the internal structure of protons and neutrons is one of the outstanding questions in experimental and theoretical nuclear and hadron physics. Impressive progress has been achieved recently.

The paper, clearly and concisely, addresses several of the issues related to the microscopic structure of hadrons and nuclei:

1. The three–dimensional structure of the nucleon in QCD, which involves the spatial distributions of quarks and gluons, their orbital motion, and possible correlations between spin and intrinsic motion;

2. The fundamental colour fields in nuclei (nuclear parton densities, shadowing, coherence effects, colour transparency);

3. The conversion of colour charge to hadrons (fragmentation, parton propagation through matter, in–medium jets).

The conceptual aspects of these questions are briefly reviewed and the measurements that would address them are discussed, with emphasis on the new information that could be obtained with experiments at an electron-ion collider (EIC). Such a medium–energy EIC could be realized at Jefferson Lab after the 12 GeV upgrade (MEIC), or at Brookhaven National Lab as the low–energy stage of eRHIC.

A. Accardi, V. Guzey, A. Prokudin and C. Weiss, ‘Nuclear physics with a medium-energy Electron-Ion Collider’, Eur. Phys. J. A (2012) 48, 92

[Abstract]

Time shift in the OPERA setup with muons in the LVD and OPERA detectors (Vol. 43 No. 5)

Distribution of the δt = tLVD − t*OPERA for corrected events. All the events of each year are grouped into one single point with the exception of 2008, which is subdivided into three periods.


The halls of the INFN Gran Sasso Laboratory (LNGS) were designed by A. Zichichi and built in the 1980s, oriented towards CERN for experiments on neutrino beams. In 2006, the CERN Neutrinos to Gran Sasso (CNGS) beam started the search for tau-neutrino appearances in the muon-neutrino beam produced at CERN, using the OPERA detector built for this purpose.

In 2011, OPERA reported that the time of flight (TOF) of neutrinos measured over the 730 km between CERN and LNGS was about 60 ns shorter than that of light. Since the synchronisation of two clocks in different locations is a very delicate operation and a technical challenge, OPERA had to be helped by external experts in metrology.
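To give a feel for the size of the reported effect, the two figures quoted above can be turned into a fractional speed excess. The following is only a back-of-the-envelope sketch: it treats 730 km and 60 ns as exact round numbers, and the function names are illustrative, not taken from the OPERA analysis.

```python
# Back-of-the-envelope check using the round numbers quoted in the text.
C = 299_792_458.0  # speed of light in vacuum, m/s (exact by definition)


def light_travel_time(distance_m: float) -> float:
    """Time light needs to cover distance_m in vacuum, in seconds."""
    return distance_m / C


def fractional_speed_excess(distance_m: float, early_arrival_s: float) -> float:
    """(v - c) / c for a particle arriving early_arrival_s before light.

    With t = distance / c and v = distance / (t - dt),
    (v - c) / c = dt / (t - dt).
    """
    t_light = light_travel_time(distance_m)
    return early_arrival_s / (t_light - early_arrival_s)


baseline_m = 730e3   # approximate CERN-LNGS distance (assumed round value)
early_s = 60e-9      # approximate early arrival quoted in the text

t_light = light_travel_time(baseline_m)
excess = fractional_speed_excess(baseline_m, early_s)

print(f"light travel time: {t_light * 1e3:.3f} ms")
print(f"(v - c) / c:       {excess:.2e}")
```

A 60 ns shift on a flight time of roughly 2.4 ms corresponds to a relative effect of a few parts in 100,000, which is why the timing chain had to be controlled at the nanosecond level.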

This paper presents a much simpler and completely local check, obtained by synchronising OPERA and LVD. The two detectors, both at LNGS, are about 160 m apart along the axis of the so-called “Teramo anomaly.” This structural anomaly in the Gran Sasso massif, established by LVD many years ago, lets through high-energy horizontal muons at the rate of one every few days, penetrating both experiments. The LVD-OPERA TOF measurement shows an offset of OPERA comparable with the claimed superluminal effect during the period in which the corresponding data were collected, and no offset in the periods before and after that data taking (when OPERA had corrected equipment malfunctions), as shown in the figure.

The result of this joint analysis is the first quantitative measurement of the relative time stability between the two detectors and provides a check that is completely independent of the TOF measurements of CNGS neutrino events, pointing to the existence of a possible systematic effect in the OPERA neutrino velocity analysis.

N.Yu. Agafonova plus 19 co-authors from LVD collaboration and 70 from OPERA collaboration, ‘Determination of a time shift in the OPERA setup using high-energy horizontal muons in the LVD and OPERA detectors’, Eur. Phys. J. Plus (2012) 127, 71

[Abstract]

Neutrons escaping to a parallel world? (Vol. 43 No. 5)

An anomaly in the behaviour of ordinary particles may point to the existence of mirror particles that could be candidates for dark matter responsible for the missing mass of the universe.

In the present paper, the authors hypothesised the existence of mirror particles to explain the anomalous loss of neutrons observed experimentally. The existence of such mirror matter had been suggested in various scientific contexts some time ago, including in the search for dark matter.

The authors re-analysed the experimental data obtained at the Institut Laue-Langevin, France. It showed that the loss rate of very slow free neutrons appeared to depend on the direction and strength of the magnetic field applied.

The authors believe it could be interpreted in the light of a hypothetical parallel world consisting of mirror particles. Each neutron would have the ability to transition into its invisible mirror twin, and back, oscillating from one world to the other. The probability of such a transition happening was predicted to be sensitive to the presence of magnetic fields, and could be detected experimentally.

This oscillation could occur within a timescale of a few seconds, according to the paper. The possibility of such a fast disappearance of neutrons, much faster than the ten-minute-long neutron decay, is subject to the condition that the Earth possesses a mirror magnetic field that could be induced by mirror particles floating around in the galaxy as dark matter. Hypothetically, the Earth could capture the mirror matter via some feeble interactions between ordinary particles and those from parallel worlds.

Z. Berezhiani and F. Nesti, ‘Magnetic anomaly in UCN trapping: signal for neutron oscillations to parallel world?’, Eur. Phys. J. C (2012) 72, 1974

[Abstract]

2D Raman mapping of stress and strain in Si waveguides (Vol. 43 No. 5)

Measured stress maps and lattice deformation sketches for waveguides: (a)-(b) without cladding (SOI0), (c)-(d) with tensile-stressing cladding (SOI1), (e)-(f) with SOI2). The arrows show the type of observed stress.


In this work (Semicond. Sci. Technol. 27, 085009 (2012)), we characterized the mechanical stress of strained silicon waveguides by micro-Raman spectroscopy. We performed accurate measurements on the waveguide facet by using a confocal Raman microscope. The silicon-on-insulator waveguide is strained by depositing thin stressing silicon nitride (SiN) overlayers. The applied stress is varied by using different deposition techniques. By investigating the waveguide facets and modeling the measured Raman shifts, the local stress and strain are extracted. Thus, two-dimensional maps of the stress distribution as a function of SiN deposition parameters are drawn, showing different strain distributions depending on the deposition technique. Moreover, the results show a relevant role played by the buried oxide layer, which strongly affects the waveguides' final stress. Hence, the combined actions of the SiN and buried oxide layers deform the whole silicon core layer and cause an inhomogeneous strain distribution in the waveguides.

The strain inhomogeneity is fundamental to enable second order nonlinear optical devices because it breaks the silicon centro-symmetry yielding a huge second order nonlinear susceptibility (χ(2)). As we demonstrate in Nature Mater. 11 148-154 (2012), a χ(2) of several tens of picometers per volt is observed and a relation between the χ(2) values and the strain inhomogeneity and magnitude is revealed. Particularly, the largest conversion efficiency is observed in the waveguides where the micro-Raman spectroscopy shows the highest deformation. Currently, no explicit theory relating the strain with the χ(2) exists. Thus, the two-dimensional micro-Raman maps could be a valuable tool for experimental confirmation of future theories.

F. Bianco, K. Fedus, F. Enrichi, R. Pierobon, M. Cazzanelli, M. Ghulinyan, G. Pucker and L. Pavesi, ‘Two-dimensional micro-Raman mapping of stress and strain distributions in strained silicon waveguides which show second order optical nonlinearities’, Semicond. Sci. Technol. (2012) 27, 085009

[Abstract]

Turbulent convection at the core of fluid dynamics (Vol. 43 No. 5)

Buoyant convection of a fluid subjected to thermal differences is a classical problem in fluid dynamics. Its importance is compounded by its relevance to many natural and technological phenomena. For example, in the Earth's atmosphere, the study of thermal convection allows us to make weather forecasts and, on larger time and length scales, climate calculations. In the oceans, where there are differences in temperature and salinity, turbulent convection drives deep-water currents. Geology and astrophysics are other areas where thermal convection has great impact. The simplest and most useful convection system is the Rayleigh-Bénard setup: a fluid in a container heated from below and cooled from above. In this classical system, the flow properties are determined by the scale and geometry of the container, the material properties of the fluid, and the temperature difference between top and bottom. The crux of the problem is how to determine the rate of heat transfer in a given condition.
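The ingredients listed above (container scale and geometry, fluid properties, temperature difference) are conventionally condensed into a few dimensionless numbers. The standard textbook definitions are given here for orientation; the symbol choices are assumptions of this note and may differ from the notation of the Colloquium paper.

```latex
\mathrm{Ra} = \frac{g\,\alpha\,\Delta T\,H^{3}}{\nu\,\kappa}, \qquad
\mathrm{Pr} = \frac{\nu}{\kappa}, \qquad
\Gamma = \frac{D}{H}, \qquad
\mathrm{Nu} = \frac{Q\,H}{\lambda\,\Delta T}
```

Here $g$ is the gravitational acceleration, $\alpha$ the thermal expansion coefficient, $\Delta T$ the temperature difference between the bottom and top plates, $H$ and $D$ the height and width of the container, $\nu$ the kinematic viscosity, $\kappa$ the thermal diffusivity, $\lambda$ the thermal conductivity and $Q$ the heat flux per unit area. The Rayleigh number Ra measures the strength of the thermal driving, the Prandtl number Pr characterises the fluid, the aspect ratio $\Gamma$ describes the geometry, and the Nusselt number Nu is the heat transfer in units of what pure conduction would carry, so that Nu = 1 means no convective transport. In this language, determining the rate of heat transfer amounts to finding Nu as a function of Ra, Pr and $\Gamma$.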

In this Colloquium paper, the authors review the recent experimental, numerical and theoretical advances in turbulent Rayleigh-Bénard convection. Particular emphasis is given to the physics and structure of the thermal and velocity boundary layers, which play a crucial role in governing the turbulent transport of heat and momentum in highly turbulent regimes. The authors moreover discuss some important extensions of Rayleigh-Bénard convection, such as the so-called non-Oberbeck-Boussinesq effects and address convection with phase changes.

F. Chillà and J. Schumacher, ‘New perspectives in turbulent Rayleigh-Bénard convection’, Eur. Phys. J. E (2012) 35, 58

[Abstract]

Spurious switching points in traded stock dynamics (Vol. 43 No. 5)

Trends in stock prices dynamics are notoriously difficult to interpret.


A selection of biased statistical subsets could yield an inaccurate interpretation of market behaviour and financial returns.

In the present paper, the authors rebuff the existence of power laws governing the dynamics of traded stock volatility, volume and intertrade times at times of stock price extrema, by demonstrating that what appeared as “switching points” in financial markets trends was due to a bias in the interpretation of market data statistics.

A prior study based on conditional statistics suggested that the local maxima of volatility and volume, and local minima of inter-trade times are akin to switching points in financial returns, reminiscent of critical transition points in physics. These local extrema were thought to follow approximate power laws.

To disentangle the effect of the conditional statistics on the market data trends, the authors compare stock prices traded on the financial market with a known statistical model of price featuring simple random behaviour, called Geometric Brownian Motion (GBM). They demonstrate that “switching points” occur in the GBM model, too.
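The selection effect can be illustrated with a few lines of simulation. The sketch below is not the authors' analysis: it generates a GBM price path with constant volatility (all parameter values, the window size `k` and the function names are assumptions chosen for illustration), picks out local price maxima, and compares the typical size of the price move leading into a peak with the typical size of all moves.

```python
import math
import random


def simulate_gbm(n_steps, mu=0.0, sigma=0.01, s0=100.0, seed=0):
    """Discrete GBM path: log-price increments are i.i.d. Gaussian."""
    rng = random.Random(seed)
    log_price = math.log(s0)
    prices = [s0]
    for _ in range(n_steps):
        log_price += (mu - 0.5 * sigma**2) + sigma * rng.gauss(0.0, 1.0)
        prices.append(math.exp(log_price))
    return prices


def local_maxima(prices, k):
    """Indices i where prices[i] is the strict maximum over i-k .. i+k."""
    peaks = []
    for i in range(k, len(prices) - k):
        window = prices[i - k:i + k + 1]
        if prices[i] == max(window) and window.count(prices[i]) == 1:
            peaks.append(i)
    return peaks


def abs_log_returns(prices):
    """abs_returns[i] is the size of the move from step i to step i + 1."""
    return [abs(math.log(b / a)) for a, b in zip(prices, prices[1:])]


prices = simulate_gbm(20_000)
abs_returns = abs_log_returns(prices)
peaks = local_maxima(prices, k=5)

# Average move size over the whole path versus the move that ends at a peak.
unconditional = sum(abs_returns) / len(abs_returns)
into_peak = sum(abs_returns[i - 1] for i in peaks) / len(peaks)

print(f"peaks found:                {len(peaks)}")
print(f"mean |return|, all steps:   {unconditional:.5f}")
print(f"mean |return|, into a peak: {into_peak:.5f}")
```

Although the simulated volatility never changes, the moves selected by conditioning on a price peak are larger on average than the unconditional ones. An apparent volatility maximum at the "switching point" therefore appears in pure random data, which is the kind of bias the paper describes.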

The authors find that, in the case of volatility data, the misguided interpretation of switching points stems from a bias in the selection of price peaks that imposes a condition on the statistics of price change, which skews its distributions. Under this bias, switching points in volume appear naturally due to the volume-volatility correlation. For the intertrade times, they show that extrema and power laws result from the bias introduced in the format of transaction data.

V. Filimonov and D. Sornette, ‘Spurious trend switching phenomena in financial markets’, Eur. Phys. J. B (2012) 85, 155

[Abstract]

Disentangling information from photons (Vol. 43 No. 5)

Disentangling information from photons (Image: © Peter Nguyen, iStockphoto)


This work shows how information can be extracted more reliably from the photon pairs used in quantum computing and cryptography. The authors have found a new method of assessing the information contained in such photon pairs.

The findings are so robust that they enable access to the information even when the measurements on photon pairs are imperfect.

The authors focus on photon pairs described as being in a state of quantum entanglement: i.e., made up of many superimposed pairs of states. This means that these photon pairs are intimately linked by common physical characteristics such as a spatial property called orbital angular momentum, which can display a different value for each superimposed state. They rely on a tool capable of decomposing the photon pairs’ superimposed states onto the multiple dimensions of a Hilbert space, which is a virtual space described by mathematical equations. This approach allows them to understand the level of the photon pairs’ entanglement.

It is shown that the higher the degree of entanglement, the more accessible the information that photon pairs carry. This means that generating entangled photon pairs with a sufficiently high dimension—that is with a high enough number of decomposed photon states that can be measured—could help reveal their information with great certainty.

As a result, even an imperfect measurement of photons’ physical characteristics does not affect the amount of information that can be gained, as long as the level of entanglement was initially strong. These findings could lead to quantum information applications with greater resilience to errors and a higher information density coding per photon pair. They could also lead to cryptography applications where fewer photons carry more information about complex quantum encryption keys.

F.M. Miatto, T. Brougham and A.M. Yao, ‘Cartesian and polar Schmidt bases for down-converted photons’, Eur. Phys. J. D (2012) 66, 183

[Abstract]

Attosecond control of electron correlation (Vol. 43 No. 5)

The photo-recombination dipole phase ΔΦ in a correlation-assisted channel is proportional to electric field strength FIR at the time of recombination tr.


Electron correlation is ubiquitous in one-photon ionization and its time reverse, photo-recombination. It redistributes transition probabilities between open ionization channels in an atom or molecule. The photo-ionization and photo-recombination cross-sections are commonly assumed to be a fixed property of the target, impervious to experimental manipulation.

This article develops an analytical model of correlated photo-ionization and photo-recombination in the presence of a strong, near-infrared (IR) laser field. It shows that the characteristic time of the electron-electron interaction differs slightly from the time of the ionization (or recombination). As a result, correlation channels acquire an additional phase, proportional to the instantaneous IR laser electric field. Interferences between the direct and correlation channels then lead to either suppression or enhancement of overall cross-sections. These interferences are under direct experimental control and can be used to adjust probabilities of ionization and recombination.

Numerical estimates suggest that the desired control conditions are attainable in strong-field experiments. If the prediction is confirmed by experiment, it will open new avenues for strong-field investigations of electron correlation and for the design of high-harmonic radiation sources.

S. Patchkovskii, O. Smirnova and M. Spanner, ‘Attosecond control of electron correlations in one-photon ionization and recombination’, J. Phys. B: At. Mol. Opt. Phys. (2012) 45, 131002

[Abstract]

The “inertia of heat” concept revisited (Vol. 43 No. 5)

What is the general relativistic version of the Navier-Stokes-Fourier dissipative hydrodynamics? Surprisingly, no satisfactory answer to this question is known today. Eckart's early solution [Eckart, Phys. Rev. (1940) 58, 919] is considered outdated on many grounds: the instability of its equilibrium states, ill-posed initial-value formulation, inconsistency with linear irreversible thermodynamics, etc. Although alternative theories have been proposed recently, none appears to have won the consensus.

This paper reconsiders the foundations of Eckart's theory, focusing on its main peculiarity and simultaneous difficulty: the “inertia of heat” term in the constitutive relation for the heat flux, which couples temperature to acceleration. In particular, it shows that this term arises only if one insists on defining the thermal diffusivity independently of the gravitational field. It is argued that this is not a physically sensible approach, because gravitational time dilation implies that the diffusivity actually varies in space. In a nutshell, where time runs faster, thermal diffusion also runs faster. It is proposed that this is the physical meaning of the “inertia of heat” concept, and that such an effect should be expected in any theory of dissipative hydrodynamics that is consistent with general relativity.
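For readers who want to see the term in question, Eckart's constitutive relation for the heat flux is commonly written as follows. This is the standard textbook form, with units $c = 1$ and metric signature $(-,+,+,+)$ assumed; the notation is not necessarily that of the paper.

```latex
q^{\mu} = -\kappa\, h^{\mu\nu}\left(\nabla_{\nu} T + T\, a_{\nu}\right), \qquad
h^{\mu\nu} = g^{\mu\nu} + u^{\mu}u^{\nu}, \qquad
a_{\nu} = u^{\lambda}\nabla_{\lambda} u_{\nu}
```

Here $u^{\mu}$ is the fluid four-velocity, $a_{\nu}$ its four-acceleration, $h^{\mu\nu}$ the projector orthogonal to $u^{\mu}$, $T$ the temperature and $\kappa$ the thermal conductivity. The first term is the relativistic counterpart of Fourier's law; the second, $T a_{\nu}$, is the “inertia of heat” term that couples temperature to acceleration. Setting $q^{\mu} = 0$ for a fluid at rest in a static gravitational field gives the Tolman relation $T\sqrt{-g_{00}} = \text{const}$, that is, an equilibrium temperature that varies in space.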

M. Smerlak, ‘On the inertia of heat’, Eur. Phys. J. Plus (2012) 127, 72

[Abstract]

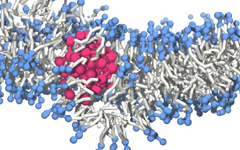

Translocation of polymers through lipid bilayers (Vol. 43 No. 5)

Snapshots of a polymer chain diffusing through the bilayer at the critical hydrophobicity. Strong perturbations of the lipid ordering can be observed during the adsorption/translocation event (middle).


Lipid bilayers emerge by self-organization of amphiphilic molecules and are the essential component of the membranes of living cells. One of their important tasks is the selective exchange of substances between the cell and its environment. This becomes particularly interesting for delivering foreign molecules and RNA into the cell. In the classical view of cell biology, static structures such as pores and channels formed by specific proteins control the translocation of molecules.

In this work we show that there exists a straightforward mechanism for translocation of polymers through lipid bilayers if the monomers of the chain show a certain balance of hydrophobic and hydrophilic strength. Using the bond fluctuation method with explicit solvent to simulate the self-organized lipid bilayer and the polymer chain, we show that the chain is adsorbed by the bilayer at a critical hydrophobicity of the monomers. At this point all monomers have an intermediate degree of hydrophobicity, which is large enough to overcome the insertion barrier of the ordered lipids, but still small enough to avoid trapping in the core of the bilayer. In a narrow range around this critical hydrophobicity the chain can almost freely penetrate through the model membrane, whose hydrophobic core becomes energetically transparent here. Our simulations also allow us to calculate the permeability of the membrane with respect to the solvent. We show that the permeability is strongly increased close to the critical hydrophobicity, suggesting that here the perturbation of the membrane patch around the adsorbed chain is highest.

J-U. Sommer, M. Werner and V. A. Baulin, ‘Critical adsorption controls translocation of polymer chains through lipid bilayers and permeation of solvent’, EPL, (2012) 98, 18003

[Abstract]

High intensity 6He beam production (Vol. 43 No. 5)

ISOLDE production unit equipped with a spallation neutron source along the target oven. Fast release of 6He anti-neutrino emitters, produced by a BeO target with CERN’s proton beam.


Nuclear structures of short-lived radioisotopes are nowadays investigated in large scale facilities based on in-flight fragmentation or isotope separation online (ISOL) methods. The ISOL technique has been constantly extended at CERN-ISOLDE, where 1.4 GeV protons are exploited by physicists to create radioisotopes in thick materials; these exotic nuclei are released and pumped online into an ion source, producing a secondary beam which is further selected in a magnetic mass spectrometer before post-acceleration.

Ten years ago it was proposed to inject suitable ISOL beams into the CERN accelerator complex and to store these isotopes in a decay ring with straight sections, forming a “β-beam facility”; intense sources of pure electron neutrinos - emitted when such isotopes decay - are directed towards massive underground detectors for fundamental studies such as the observation of neutrino flavour oscillations and CP violation.

Our paper reports on the experimental production of the anti-neutrino emitter 6He. We use a two-step reaction in which the proton beam interacts with a tungsten neutron spallation source. The emitted neutrons intercept a BeO target to produce 6He through 9Be(n,α)6He reactions. The neutron field was simulated by Monte-Carlo codes such as Fluka and experimentally measured. The large predicted 6He production rates were also experimentally verified. Fast 6He diffusion, driven by the selection of a suitable BeO material, could be demonstrated, leading to the highest 6He beam rates ever achieved at ISOLDE. These results provide a firm experimental confirmation that the β-beam will be able to deliver sufficient anti-neutrino rates using a neutron spallation source similar to ISIS-RAL (UK). This work is now being completed by the experimental validation of the 18Ne neutrino source design.

T. Stora and 11 co-authors, ‘A high intensity 6He beam for the β-beam neutrino oscillation facility’, EPL (2012) 98, 32001

[Abstract]

Improved quantum information motion control (Vol. 43 No. 5)

Sketch map of the double quantum dot (two horizontal lines) coupled with the nanomechanical resonator (rectangle).


A new model simulates closer control over the transport of information-carrying electrons under specific external vibration conditions. The present article develops a new method for handling the effect of the interplay between vibrations and electrons on electronic transport, which could have implications for quantum computers due to improvements in the transport of discrete amounts of information, known as qubits, encoded in electrons.

The authors create an electron transport model to assess electrons’ current fluctuations based on a double quantum dot (DQD) subjected to quantized modes of vibration, also known as phonons, induced by a nanomechanical resonator. Unlike previous studies, this work allows for arbitrarily strong coupling between electrons and phonons.

They successfully control the excitations of the phonons without impacting the transport of quantum information. They decouple the electron-phonon interaction by inducing resonance frequency of phonons. When the energy excess between the two quantum dots of the DQD system is sufficient to create an integer number of phonons, electrons can reach resonance and tunnel from one quantum dot to the other.

As electron-phonon coupling becomes even stronger, the phenomenon of phonon scattering represses electron transport and confines the electrons, suggesting that tuning the electron-phonon coupling could make a good quantum switch to control the transport of information in quantum computers.

C. Wang, J. Ren, B. Li and Q-H. Chen, ‘Quantum transport of double quantum dots coupled to an oscillator in arbitrary strong coupling regime’, Eur. Phys. J. B (2012) 85, 110

[Abstract]