Combining experimental data to test models of new physics that explain dark matter (Vol. 49, No. 1)

The most statistically consistent and versatile tool to date aims to gain insight into dark matter by rigorously comparing models that extend the standard model of particle physics with the latest experimental data.

In chess, a gambit is a move in which a player risks a piece to gain an advantage. Dark matter is an ingredient missing from the standard model of particle physics, the minimal model that describes the fundamental particles we have observed. The quest to explain it has left many physicists eager to gain an advantage when comparing theoretical models with as many experiments as possible. In particular, keeping calculations fast without reducing the number of parameters involved is a priority. The GAMBIT collaboration, an international group of physicists, has now published a series of papers that offer the most promising approach to date to understanding dark matter. The collaboration has developed the eponymous GAMBIT software. It is designed to combine the growing volume of experimental data from multiple sources in a statistically consistent manner, a process referred to as a global fit. Such data typically come from astrophysical observations and from particle collision experiments, such as those at the Large Hadron Collider (LHC) at CERN near Geneva, Switzerland.

The GAMBIT Collaboration, Status of the scalar singlet dark matter model, Eur. Phys. J. C 77, 568 (2017)
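
The idea of a global fit can be illustrated with a deliberately tiny sketch. It assumes a hypothetical one-parameter model (`theta`) that predicts two observables, each measured by an independent experiment with Gaussian errors. The combined log-likelihood is then simply the sum of the individual ones, and a grid scan gives the best fit and an approximate 68% interval. All names and numbers are illustrative. GAMBIT itself handles many parameters, non-Gaussian likelihoods and dedicated sampling algorithms, and none of that is reproduced here.

```python
import numpy as np

# Toy global fit: a hypothetical one-parameter model confronted with two
# independent Gaussian measurements. Numbers are illustrative, not GAMBIT data.


def predicted_observables(theta):
    """Hypothetical model: two observables that both depend on theta."""
    return np.array([theta, 2.0 * theta])


def combined_log_likelihood(theta, measured, sigma):
    """Sum of independent Gaussian log-likelihoods (constant terms dropped)."""
    residuals = (predicted_observables(theta) - measured) / sigma
    return -0.5 * np.sum(residuals ** 2)


def global_fit(measured, sigma, grid):
    """Grid scan: best-fit theta and an approximate 68% interval (delta chi^2 <= 1)."""
    ln_l = np.array([combined_log_likelihood(t, measured, sigma) for t in grid])
    best = grid[np.argmax(ln_l)]
    delta_chi2 = -2.0 * (ln_l - ln_l.max())
    allowed = grid[delta_chi2 <= 1.0]
    return best, allowed.min(), allowed.max()


grid = np.linspace(0.0, 2.0, 2001)
best, low, high = global_fit(np.array([1.0, 2.4]), np.array([0.2, 0.2]), grid)
print(f"best fit theta = {best:.3f}, 68% interval [{low:.3f}, {high:.3f}]")
```

With these inputs the combined best fit lies near theta = 1.16. That is between the values each experiment alone would prefer (1.0 and 1.2), and the combined interval is narrower than either experiment would give on its own.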

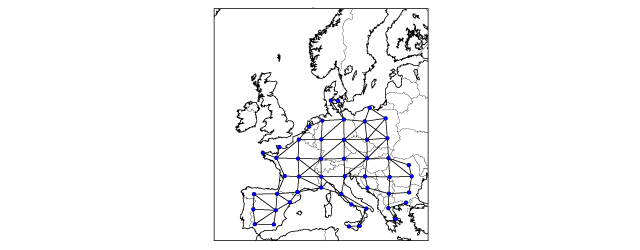

Spatial scales in electricity system modelling (Vol. 49, No. 1)

Models of large-scale electricity systems often incorporate only a limited level of spatial detail regarding the distribution of generation and consumption. This coarse-graining is imposed by limited data availability or by computational constraints, and it also applies to the representation of the power grid. In particular, the network topology, as well as the load and generation patterns below a given spatial scale, have to be aggregated into representative system nodes. But how does this coarse-graining affect what simulations tell us about the system? The authors address this important question using a simplified but spatially detailed model of the European electricity system with a high share of renewable generation. By applying a clustering algorithm, they derive the system's transmission needs at various spatial scales. Surprisingly, transmission infrastructure costs vary only weakly under the coarse-graining procedure. This can be understood using an analytical approach, which yields approximate spatial scaling laws for measures of transmission infrastructure in electricity system models.

M. Schäfer, S. B. Siggaard, K. Zhu, C. R. Poulsen and M. Greiner, Scaling of transmission capacities in coarse-grained renewable electricity networks, EPL 119, 38004 (2017)
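
The sketch below makes the coarse-graining step concrete. It clusters network nodes by position with plain k-means and sums each cluster's net injection (generation minus load). Both the use of k-means on geographic coordinates and the `coarse_grain` helper are assumptions for illustration. The study's own clustering algorithm and transmission model may differ. What the sketch shows is that imbalances which cancel inside a cluster disappear from the aggregated model, so flows within a cluster are no longer represented.

```python
import numpy as np


def kmeans(points, k, n_iter=50, seed=0):
    """Plain Lloyd's k-means on node coordinates; returns labels and centres."""
    points = np.asarray(points, dtype=float)
    rng = np.random.default_rng(seed)
    # Initialise centres on k distinct randomly chosen nodes (fancy indexing copies)
    centres = points[rng.choice(len(points), size=k, replace=False)]
    for _ in range(n_iter):
        # Assign every node to its nearest centre (squared Euclidean distance)
        d2 = ((points[:, None, :] - centres[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        # Move each centre to the mean position of its nodes; keep empty clusters fixed
        for j in range(k):
            members = points[labels == j]
            if len(members) > 0:
                centres[j] = members.mean(axis=0)
    return labels, centres


def coarse_grain(coords, injection, k):
    """Hypothetical helper: aggregate nodal net injections into k representative nodes."""
    labels, centres = kmeans(coords, k)
    aggregated = np.bincount(labels, weights=injection, minlength=k)
    return labels, centres, aggregated


rng = np.random.default_rng(1)
coords = rng.uniform(0.0, 10.0, size=(200, 2))   # hypothetical node positions
injection = rng.normal(0.0, 1.0, size=200)       # hypothetical generation minus load
labels, centres, aggregated = coarse_grain(coords, injection, k=10)
print(np.abs(injection).sum(), np.abs(aggregated).sum())
```

By the triangle inequality, the total absolute injection of the aggregated nodes can never exceed that of the original nodes. This is one way of seeing why coarse-graining hides part of the transmission need.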

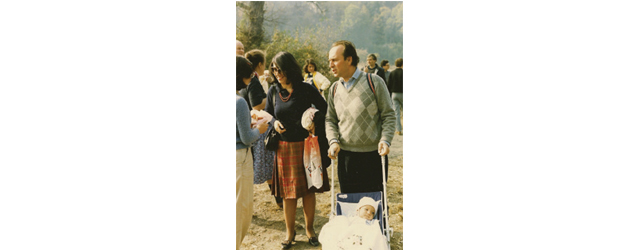

How theoretical particle physicists made history with the Standard Model (Vol. 49, No. 1)

The personal recollections of a physicist involved in developing the Standard Model, the reference model of particle physics, with a particular focus on Italy.

Understanding the Universe requires first understanding its building blocks, the subject of particle physics. Over the years, an elegant model of particle physics, dubbed the Standard Model, has emerged as the main point of reference for describing the fundamental components of matter and their interactions. The Standard Model is not confined to particle physics. It also guides our understanding of phenomena in the Universe at large, back to the first moments of the Big Bang, and it sets the stage for a novel cosmic problem, namely the identification of dark matter. Placing the Standard Model in a historical context sheds valuable light on how the theory came to be. In a remarkable recent paper, Luciano Maiani shares his personal recollections with Luisa Bonolis. In an interview recorded over several days in March 2016, Maiani outlines the roles of the researchers who were instrumental in the evolution of theoretical particle physics during the years when the Standard Theory (the Standard Model) was developed.

L. Maiani and L. Bonolis, The Charm of Theoretical Physics (1958-1993) Oral History Interview, Eur. Phys. J. H, (2017)

Observational constraints and astrophysical uncertainties in WIMP detection (Vol. 49, No. 1)

Weakly Interacting Massive Particles (WIMPs) are among the best candidates for the exotic dark matter that makes up about 80% of the matter in the Universe. They are predicted to exist in extensions of the standard model of particle physics and would have been produced in the Big Bang in the right amount to account for the dark matter. Laboratory-based direct detection experiments aim to detect WIMPs via their rare interactions with ordinary matter. The signals expected in these experiments depend on the local dark matter density and velocity distribution. An accurate understanding of these quantities is therefore required to obtain reliable constraints on the WIMP's particle physics properties, i.e. its mass and interaction cross sections. This paper reviews the current observational constraints on the local dark matter distribution, as well as numerical simulations of Milky Way-like galaxies. It then discusses how uncertainties in these quantities affect the experimental signals. It concludes with an overview of methods for handling these astrophysical uncertainties, and hence for obtaining accurate constraints on the WIMP mass and cross sections from current and future experimental data.

A. M. Green, Astrophysical uncertainties on the local dark matter distribution and direct detection experiments, J. Phys. G: Nucl. Part. Phys. 44, 084001 (2017)
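
The mean inverse speed, η(v_min), makes explicit how direct-detection signals depend on the local velocity distribution. It is the integral of f(v)/v over all WIMP speeds above v_min, the minimum speed needed to produce a nuclear recoil of energy E_R. The differential event rate is proportional to ρ₀ η(v_min). The sketch below assumes the simplest Standard Halo Model: an isotropic Maxwellian with v0 = 220 km/s, no escape-speed truncation and a detector at rest in the Galactic frame. These simplifications are ours, not the review's. The nuclear mass and WIMP mass in the example are illustrative.

```python
import math

C_KM_S = 299792.458  # speed of light in km/s


def eta_analytic(v_min, v0=220.0):
    """Mean inverse speed (s/km) for an isotropic Maxwellian with no escape-speed
    cut-off, seen by a detector at rest in the Galactic frame."""
    return 2.0 / (math.sqrt(math.pi) * v0) * math.exp(-(v_min / v0) ** 2)


def eta_numeric(v_min, v0=220.0, v_max=5000.0, n=200_000):
    """Same quantity by trapezoidal integration of 4*pi*v^2 f(v) / v over v > v_min."""
    def integrand(v):
        return 4.0 * v * math.exp(-(v / v0) ** 2) / (math.sqrt(math.pi) * v0 ** 3)

    h = (v_max - v_min) / n
    total = 0.5 * (integrand(v_min) + integrand(v_max))
    total += sum(integrand(v_min + i * h) for i in range(1, n))
    return total * h


def v_min_for_recoil(e_recoil_kev, m_chi_gev, m_nucleus_gev):
    """Minimum WIMP speed (km/s) able to produce a nuclear recoil of energy E_R:
    v_min = sqrt(m_N E_R / (2 mu^2)), with mu the WIMP-nucleus reduced mass."""
    mu = m_chi_gev * m_nucleus_gev / (m_chi_gev + m_nucleus_gev)
    e_recoil_gev = e_recoil_kev * 1e-6
    return math.sqrt(m_nucleus_gev * e_recoil_gev / (2.0 * mu ** 2)) * C_KM_S


# Illustrative numbers: 100 GeV WIMP, xenon-like nucleus (~122 GeV), 10 keV recoil
v = v_min_for_recoil(10.0, 100.0, 122.0)
for v0 in (200.0, 220.0, 240.0):
    print(f"v0 = {v0:.0f} km/s: eta(v_min = {v:.0f} km/s) = {eta_analytic(v, v0):.3e} s/km")
```

Changing `v0` shifts η(v_min) at a fixed recoil energy. This is the kind of astrophysical uncertainty the review discusses.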

Weyl states mean magnetic protectorates (Vol. 49, No. 1)

Electrons in conductors are essentially free particles subject to residual collisions. This picture was first proposed by Paul Drude in 1900 and later developed by Arnold Sommerfeld in 1927 using quantum concepts. The Drude-Sommerfeld assumption that electrons move freely between any two collisions is only approximate, because electrons carry charge. While in motion, they give rise to an electric current, which creates a magnetic field that can influence the trajectories of the other electrons. In the Drude-Sommerfeld picture this weak magnetic interaction among electrons is simply neglected. However, when the electrons move in a layer, this magnetic field can lead to topologically protected states, no matter how weak it is, provided collisions are only residual. The magnetic field lines created by the electronic motion form loops that pierce the layer twice. These loops arise because the electrons occupy Weyl states while still living in the parabolic band of the Drude-Sommerfeld picture. This residual magnetic interaction raises the energy of the Weyl states, since they acquire magnetic energy. Nevertheless, they fall into magnetic protectorates that forbid their decay into a lower-energy state.

M. M. Doria and A. Perali, Weyl states and Fermi arcs in parabolic bands, EPL 119, 21001 (2017)

Resolving tension on the surface of polymer mixes (Vol. 49, No. 1)

Credit iker-urteaga via Unsplash

A new study finds a simple formula to explain what happens at the surface of melts that mix short- and long-chain polymers.

Better than playing with Legos, throwing polymer chains of different lengths into a mix can yield surprising results. In a recent study, physicists examine how mixing chemically identical chains of different lengths in a melt produces unique effects at its surface. These effects stem from the way short and long polymer chains interact with each other. In such melts, chain ends tend to prefer the surface. The authors studied the effects of enriching long-chain polymer melts with short-chain polymers. Using numerical simulations, they explain the reduced surface tension of the melt as a result of short chains segregating to the surface, an effect driven by entropy. They also found an elegant formula for the surface tension of such melts in terms of the molecular weights of their components.

P. Mahmoudi and M.W. Matsen, Entropic segregation of short polymers to the surface of a polydisperse melt, Eur. Phys. J. E 40, 85 (2017)

How small does your rice pudding need to get when stirring jam into it? (Vol. 49, No. 1)

A new study suggests that two seemingly conflicting definitions of entropy, the measure of ever-increasing disorder, could be tested against each other experimentally in very small systems.

Have you ever tried turning the spoon back after stirring jam into a rice pudding? It never brings the jam back into the spoon. This ever-increasing disorder is linked to a quantity called entropy. Entropy is of interest to physicists studying the evolution of systems made up of many identical elements, such as a gas. Yet how the states of such systems should be counted is a bone of contention. Ludwig Boltzmann, one of the fathers of statistical mechanics, developed the traditional view while working with very large numbers of elements. It is opposed to the seemingly disjointed theoretical perspective of another founder of the discipline, Willard Gibbs, who described systems with a very small number of elements. In a recent study, the author demystifies this clash by analysing the practical consequences of Gibbs' definition in two systems of well-defined size. He speculates that, for certain quantities, the differences between Boltzmann's and Gibbs' approaches could be measured experimentally.

L. Ferrari, Comparing Boltzmann and Gibbs definitions of entropy in small systems, Eur. Phys. J. Plus, (2017)
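
The difference between the two ways of counting states shows up in a toy system of N quantum harmonic oscillators sharing q energy quanta (an Einstein solid). This model is chosen here for illustration and is not necessarily one of the two systems analysed in the paper. We follow the usual convention in this debate. The "Boltzmann" entropy counts the microstates with exactly energy q, while the "Gibbs" entropy counts all microstates with energy up to q. The sketch takes inverse temperatures as finite differences of each entropy, with k_B = 1 and energies in units of ħω.

```python
from math import comb, log

# Toy model: N harmonic oscillators sharing q quanta (Einstein solid).
# k_B = 1 and energies in units of hbar*omega. Requires N >= 2.


def boltzmann_entropy(n_osc, q):
    """ln of the number of microstates with exactly q quanta (surface count)."""
    return log(comb(q + n_osc - 1, q))


def gibbs_entropy(n_osc, q):
    """ln of the number of microstates with at most q quanta (volume count).
    Uses sum_{j<=q} C(j+N-1, j) = C(q+N, q)."""
    return log(comb(q + n_osc, q))


def inverse_temperature(entropy, n_osc, q):
    """Finite-difference estimate of dS/dE at energy q."""
    return entropy(n_osc, q + 1) - entropy(n_osc, q)


def relative_temperature_gap(n_osc, q):
    beta_b = inverse_temperature(boltzmann_entropy, n_osc, q)
    beta_g = inverse_temperature(gibbs_entropy, n_osc, q)
    return abs(beta_g - beta_b) / beta_b


for n_osc, q in [(2, 10), (10, 50), (1000, 1000)]:
    print(n_osc, q, relative_temperature_gap(n_osc, q))
```

For N = 2 oscillators the two inverse temperatures differ by roughly 90%. For N = 1000 they agree to better than 0.1%, consistent with the differences mattering only in small systems.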

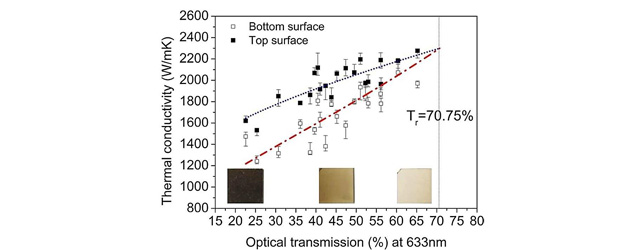

Polycrystalline diamond: thermal conductivity versus optical defects (Vol. 49, No. 1)

Thanks to continuing advances in production technology, synthetic diamond can now be made in large quantities and in a range of qualities. This makes it feasible for more applications, such as heat sinks for electronics. In this work, the authors collaborated with one of the largest synthetic diamond companies (IIa Technologies Pte. Ltd., Singapore). They systematically studied how various optically active defects influence the thermal conductivity of synthetic polycrystalline diamond. The top surface shows a higher thermal conductivity than the bottom surface (where growth nucleation started), and it also has lower defect densities. On the top (growth) surface, the non-diamond carbon phase and C-H stretch have no significant influence, because these defects are present only in low concentrations. However, heat transport there is still limited by Nₛ⁰ (neutral substitutional nitrogen) defects, the main contributor to the lower thermal conductivity of the top surfaces. On the bottom surface, the non-diamond carbon phase, silicon vacancies, C-H stretch and Nₛ⁰ defects all lead to a clear reduction in thermal conductivity. Furthermore, the authors give a fitted equation for quickly estimating thermal conductivity from optical transmission, and show that it is valid at any wavelength in the visible region. This enables a fast and reasonable estimate of thermal conductivity by visual inspection.

Q. Kong, A. Tarun, C. M. Yap, S. Xiao, K. Liang, B. K. Tay, D. S. Misra, Influence of optically active defects on thermal conductivity of Polycrystalline diamond, Eur. Phys. J. Appl. Phys. 80, 20102 (2017)

Swarm-based simulation strategy proves significantly shorter (Vol. 49, No. 1)

A new method offers a time-efficient way of simulating complex systems as they reach equilibrium.

When the maths cannot be done by hand, physicists modelling complex systems, such as the dynamics of biological molecules in the body, need to use computer simulations. Such systems need time to settle into a balanced state (equilibrium) before their properties can be measured. The question is: how long do computer simulations need to run to be accurate? Reducing the processing time for highly complex systems has long been a challenge. It cannot be achieved simply by parallelising a single simulation, because the result of each time step is needed to compute the next one. In a recent study, the authors develop a practical, partial solution for saving time in simulations that must bring a complex system to equilibrium and then measure its equilibrium properties. Their solution is to run many copies of the same simulation. In this study, they examine an ensemble of 1,000 runs, dubbed a swarm. This approach reduces the overall time needed to estimate the system's properties at equilibrium.

S. M.A. Malek, R. K. Bowles, I. Saika-Voivod, F. Sciortino, and P. H. Poole, Swarm relaxation: Equilibrating a large ensemble of computer simulations, Eur. Phys. J. E, 40, 98 (2017)
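
A toy model can illustrate the swarm idea. Many independent copies of a simple stochastic process start from the same out-of-equilibrium value, and the ensemble average is monitored step by step. Here an AR(1) process stands in for a real molecular simulation. The equilibration criterion is our own simple heuristic, not the authors' protocol: the first step at which the swarm mean lies within three standard errors of its late-time value. All parameters are hypothetical.

```python
import numpy as np


def run_swarm(n_runs=1000, n_steps=400, phi=0.98, x0=5.0, seed=0):
    """Toy swarm: n_runs independent AR(1) processes x <- phi*x + noise,
    all started at x0 and relaxing towards the equilibrium mean 0."""
    rng = np.random.default_rng(seed)
    x = np.full(n_runs, x0)
    means = np.empty(n_steps)
    sems = np.empty(n_steps)
    for t in range(n_steps):
        x = phi * x + rng.normal(0.0, 1.0, n_runs)   # one step of every copy
        means[t] = x.mean()                           # swarm average
        sems[t] = x.std(ddof=1) / np.sqrt(n_runs)     # its standard error
    return means, sems


def equilibration_step(means, sems, n_tail=100, k=3.0):
    """Heuristic: first step at which the swarm mean lies within k standard
    errors of its late-time average; returns -1 if that never happens."""
    reference = means[-n_tail:].mean()
    close = np.abs(means - reference) < k * sems
    return int(np.argmax(close)) if close.any() else -1


means, sems = run_swarm()
print("estimated equilibration step:", equilibration_step(means, sems))
```

The copies are independent, so they can run in parallel. The statistical error of the swarm mean then shrinks as 1/√(number of runs), even though each individual run still advances one step at a time.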

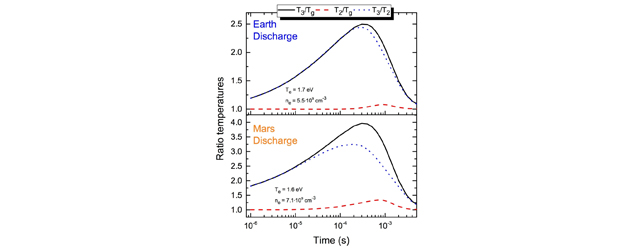

Making oxygen on Mars thanks to plasma technology (Vol. 49, No. 1)

Sending a manned mission to Mars is one of the next major steps in space exploration. Creating a breathable environment, however, is a substantial challenge. Plasma technology could hold the key to creating a sustainable oxygen supply on the red planet, by converting carbon dioxide directly from the Martian atmosphere.

Low-temperature plasmas are among the best media for CO₂ decomposition. They act both through direct electron impact and through the transfer of electron energy into vibrational excitation. The authors show that Mars offers excellent conditions for In-Situ Resource Utilisation (ISRU) by plasma. Indeed, the pressure and temperature ranges of the Martian atmosphere mean that non-thermal plasmas can be used to produce oxygen efficiently. The atmosphere is 96% carbon dioxide, and its low temperature may also enhance the vibrational effect beyond what is achievable on Earth.

The method offers a twofold solution for a manned mission to Mars. It would provide not only a stable, reliable supply of oxygen but also a source of fuel, since mixtures of oxygen and carbon monoxide have been proposed as a rocket propellant.

V. Guerra and 8 co-authors, The case for in situ resource utilisation for oxygen production on Mars by non-equilibrium plasmas, Plasma Sources Sci. Technol. 26, 11LT01 (2017)
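
Two figures of merit commonly used for plasma CO₂ conversion can be worked out in a few lines. The first is the mass of oxygen obtained from the reaction CO₂ → CO + ½O₂. The second is the energy efficiency: the conversion fraction times the reaction enthalpy, divided by the specific energy input (SEI) per molecule. The enthalpy is about 2.9 eV per molecule, i.e. 283 kJ/mol. These definitions and the example numbers are standard assumptions, not results from the paper. The efficiency ignores all other losses.

```python
# Molar masses in g/mol
M_CO2 = 44.009
M_O2 = 31.998
# Standard reaction enthalpy of CO2 -> CO + 1/2 O2: ~283 kJ/mol, i.e. ~2.93 eV per molecule
DELTA_H_EV = 2.93


def oxygen_mass_kg(co2_mass_kg, conversion):
    """Mass of O2 produced when a fraction `conversion` of the CO2 is split
    via CO2 -> CO + 1/2 O2 (half a mole of O2 per mole of CO2 converted)."""
    mol_co2 = co2_mass_kg * 1000.0 / M_CO2
    mol_o2 = 0.5 * conversion * mol_co2
    return mol_o2 * M_O2 / 1000.0


def energy_efficiency(conversion, sei_ev_per_molecule):
    """Fraction of the input energy stored chemically: conversion * dH / SEI."""
    return conversion * DELTA_H_EV / sei_ev_per_molecule


print(oxygen_mass_kg(1.0, 1.0), energy_efficiency(0.1, 1.0))
```

Splitting 1 kg of CO₂ completely yields about 0.36 kg of oxygen. A 10% conversion at an SEI of 1 eV per molecule corresponds to an energy efficiency of about 29%.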

Droplet explosion by shock waves, relevant to nuclear medicine (Vol. 49, No. 1)

An arrow shooting through an apple makes for a spectacular explosive sight in slow motion. Similarly, energetic ions passing through liquid droplets induce shock waves, which can fragment the droplets. In a recent study, the authors propose a way to observe these predicted ion-induced shock waves. They suggest that the shock waves can be identified from the way incoming ions fragment liquid droplets into multiple smaller droplets. Detecting such shock waves would change our understanding of the nature of ion-induced radiation damage to cancerous tumours. This matters for optimising ion-beam cancer therapy, which requires a thorough understanding of the relation between the physical characteristics of the incoming ion beam and its effects on biological tissue. The predicted shock waves contribute significantly to the thermomechanical damage deliberately inflicted on tumour tissue. Specifically, the collective flow intrinsic to the shock waves helps to propagate biologically harmful reactive species stemming from the ions, such as free radicals. This mechanism increases the volume of tumour cells exposed to reactive species.

E. Surdutovich, A. Verkhovtsev and A. V. Solov’yov, Ion-impact-induced multifragmentation of liquid droplets, Eur. Phys. J. D 71, 285 (2017)

Temperature gradients influencing the hysteresis of ferromagnetic nanostructures (Vol. 49, No. 1)

Downscaling magnetic bit unit sizes is crucial for future data storage technology. Heat-assisted magnetic recording (HAMR) is one key technology for ensuring that such magnetic bits remain writable. It relies on a laser heating pulse to lower the coercive field H_C of the magnetic bit unit. Here, we investigated the temperature- and temperature-gradient-dependent switching behaviour of individual, single-domain CoNi and FeNi alloy nanowires. We did so by measuring H_C via the magneto-optical Kerr effect. Under isothermal conditions, the switching field generally decreased at elevated temperatures. In contrast, temperature gradients (ΔT) along the nanowires increased the switching field by up to 15% for ΔT = 300 K in Co₃₉Ni₆₁ nanowires. We attribute this enhancement to a stress-induced magneto-elastic anisotropy contribution that counteracts the thermally assisted magnetization reversal process. Our results demonstrate that future HAMR devices must carefully distinguish between locally elevated temperatures and temperature gradients.

A.-K. Michel and 12 co-authors, Temperature gradient-induced magnetization reversal of single ferromagnetic nanowires, J. Phys. D: Appl. Phys. 50, 494007 (2017)

Quantum manipulation power for quantum information processing gets a boost (Vol. 49, No. 1)

Improving the efficiency of quantum heat engines involves reducing the number of photons in a cavity, ultimately impacting quantum manipulation power.

Conventional heat engines produce work from the exchange of heat between a high-temperature and a low-temperature bath. Now imagine a heat engine that operates at the quantum scale. Its working system is made up of atoms interacting with light (photons) confined in a small reflective cavity. This system can be brought to either a high or a low temperature, emulating the two baths found in conventional heat engines. Controlling the parameters that govern how such quantum heat engines work could dramatically increase our ability to manipulate the quantum states of the coupled atom-cavity system. It could also accelerate quantum information processing. For this to work, new ways of improving the efficiency of quantum heat engines are needed. In a recent study, the authors show methods for controlling the output power and efficiency of a quantum heat engine based on a cavity containing two atoms.

K.W. Sun, R. Li and G.-F. Zhang, A quantum heat engine based on the Tavis-Cummings model, Eur. Phys. J. D 71, 230 (2017)

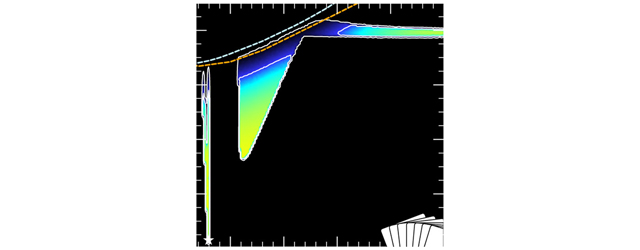

From experiment to evaluation, the case of n+238U (Vol. 49, No. 1)

Evaluated nuclear data bridge experimental and theoretical achievements on the one hand and end-user applications on the other. The complex path from experimental data to final data libraries forms the cornerstone of any evaluation process. More than 90% of the fuel in most nuclear power reactors consists of 238U, so its neutron-induced cross sections are of primary importance for accurate neutron transport calculations. Despite this significance, the relevant experimental data for the 238U(n,γ) capture reaction have only recently allowed a consistent description of the resonance region. In this work, the 238U(n,γ) average cross sections were evaluated in the energy region 5-150 keV. The evaluation was based on recommendations of the IAEA Neutron Standards project and on experimental data not included in previous evaluations.

A least-squares analysis was applied using exclusively microscopic data. This resulted in average cross sections with uncertainties below 1%, fulfilling the requirements of the High Priority Request List maintained by the OECD-NEA. The parameterisation in terms of average resonance parameters remained consistent with the results of optical model and statistical calculations. The final deliverable is an evaluated data file for 238U, validated against independent experimental data.

I. Sirakov, R. Capote, O. Gritzay, H.I. Kim, S. Kopecky, B. Kos, C. Paradela, V.G. Pronyaev, P. Schillebeeckx, and A. Trkov, Evaluation of cross sections for neutron interactions with 238U in the energy region between 5 keV and 150 keV, Eur. Phys. J. A 53, 199 (2017)
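
The simplest case of the least-squares step is combining several correlated measurements of one cross section at a single energy by generalized least squares. This weights each measurement through the inverse covariance matrix and propagates the correlations into the final uncertainty. It is only a simplified stand-in: the actual evaluation fits average resonance parameters over the whole 5-150 keV range. The `gls_average` function is hypothetical.

```python
import numpy as np


def gls_average(values, cov):
    """Generalized least-squares estimate of one quantity from correlated measurements.

    Minimizes (y - mu*1)^T V^{-1} (y - mu*1); returns (mu_hat, standard uncertainty).
    """
    y = np.asarray(values, dtype=float)
    V = np.asarray(cov, dtype=float)
    ones = np.ones_like(y)
    v_inv_ones = np.linalg.solve(V, ones)   # V^{-1} 1 without forming the inverse
    weight_sum = ones @ v_inv_ones          # 1^T V^{-1} 1
    mu_hat = (v_inv_ones @ y) / weight_sum  # (1^T V^{-1} y) / (1^T V^{-1} 1), V symmetric
    return mu_hat, np.sqrt(1.0 / weight_sum)


# Two unit-variance measurements, independent and then with correlation 0.5
print(gls_average([1.0, 3.0], np.eye(2)))
print(gls_average([1.0, 3.0], [[1.0, 0.5], [0.5, 1.0]]))
```

Positive correlations between measurements increase the combined uncertainty. Two unit-variance measurements give 1/√2 ≈ 0.71 when independent, but about 0.87 with a correlation of 0.5.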
